Technical Post: How we created a blind signatures model to anonymize user API requests

Note: This is a technical article geared toward security experts and developers. If you’re looking for a user-focused overview, please see our blog post on Ward Cloak.

One of the challenges of building a security application that requires a high degree of user trust is skepticism. Users are savvy, and have every right to be cautious when using your system.

My tool, Ward, is a browser extension that analyzes page content to detect phishing and scams. The challenge: we need to send text to an LLM backend without knowing which user sent it.

Given the past decade’s track record of data breaches and privacy violations, at everything from small companies to mega-corps, it’s crucial to treat privacy as a foundation of your application. In this article, I’ll discuss how we implemented a version of Cloudflare’s Privacy Pass protocol in Python and for the web.

Trust is not given; it’s earned.

Our objectives

Log as little user data as possible

Still collect required text inputs (URL, page content, etc.) for our LLM-powered backend

Our objectives were simple: we wanted to log less data. Unfortunately, because LLM inputs are plain text, there was no way to keep the content obfuscated all the way from the user’s API request to the LLM input. We had to get creative.

The solution?

Anonymize all user requests, so that we could never cross-reference a scan request with a specific user.

Math, Not Promises

We needed a cryptographic solution that would stand up to an audit of our goal: the link between a user and a scan request must be non-reversible, even by us.

An easy way would be to give every user the same auth key. But this wouldn’t hold up to attackers who wanted to abuse our system, and would destroy any monetization model. We had to think outside the box.

RSA Blind Signatures

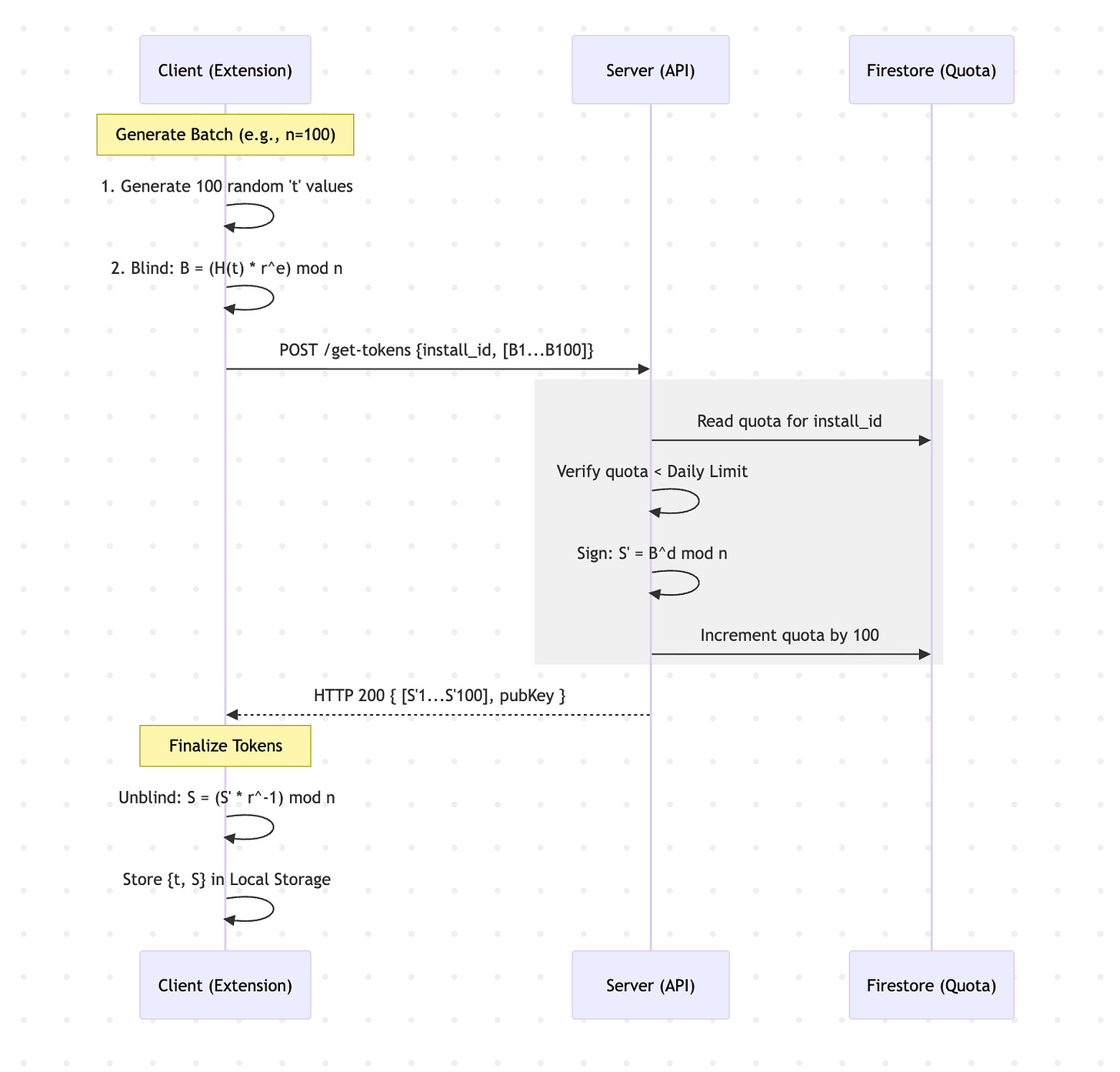

We came up with the following model: the client requests a batch of signed tokens at once (100+), which it can redeem later anonymously. We store quota information in Firestore for low latency.
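To make the quota idea concrete, here is a minimal Python sketch of per-installId issuance accounting. It is not Ward’s code: the in-memory dict stands in for the Firestore quota documents, and `QuotaLedger`, `reserve_batch`, `TOKENS_PER_PERIOD`, and `BATCH_SIZE` are hypothetical names and values chosen for illustration. Note that the installId only ever appears at issuance; redemption never touches this ledger.

```python
import threading

TOKENS_PER_PERIOD = 1000  # hypothetical per-installId cap for one billing period
BATCH_SIZE = 100          # hypothetical fixed batch size (the article says "100+")


class QuotaLedger:
    """In-memory stand-in for quota documents in Firestore (hypothetical).

    Tracks how many tokens each installId has been issued. It is consulted only
    at issuance time; redeemed tokens carry no installId, so it never sees scans.
    """

    def __init__(self) -> None:
        self.issued: dict[str, int] = {}
        self._lock = threading.Lock()

    def reserve_batch(self, install_id: str, batch_size: int = BATCH_SIZE) -> bool:
        """Reserve quota for one batch; return False if it would exceed the cap."""
        # Check and increment together so concurrent requests can't overshoot the cap.
        with self._lock:
            used = self.issued.get(install_id, 0)
            if used + batch_size > TOKENS_PER_PERIOD:
                return False
            self.issued[install_id] = used + batch_size
            return True
```

A real deployment would need the same check-and-increment to be atomic in the datastore itself (for example, inside a Firestore transaction) rather than behind a process-local lock.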

Getting batched tokens

Client generates a 256-bit random token t.

Client hashes and pads t to create message m.

Client blinds m with a random factor r.

Client sends the blinded tokens along with its installId identifier.

Server checks the installId against its quota for issued tokens.

Server signs the blinded tokens with standard RSA and sends the blind signatures to the client.

Client unblinds each signature.

Client stores the newly issued anonymous tokens locally.
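The issuance steps above can be sketched end to end in plain Python. This is a textbook blind RSA illustration under several loud assumptions: it uses a deliberately insecure toy key built from public Mersenne primes so it runs without a key generator, a simple SHA-256 full-domain hash in place of the RSA-PSS encoding that RFC 9474 specifies for RSA blind signatures, and hypothetical function names (`client_blind`, `server_sign_blinded`, `client_unblind`, `verify`). A real implementation should use a vetted library rather than this code.

```python
import hashlib
import math
import secrets

# TOY KEY, INSECURE: p and q are the public Mersenne primes 2^521-1 and 2^607-1,
# chosen only so this sketch runs without a key generator. Never use in production.
P = 2**521 - 1
Q = 2**607 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, server-side only


def hash_to_int(token: bytes, n: int) -> int:
    """Full-domain hash: stretch SHA-256 with a counter, then reduce mod n.

    A simplified stand-in for the "hash and pad" step (RFC 9474 uses RSA-PSS).
    """
    k = (n.bit_length() + 7) // 8 + 16  # 16 extra bytes keep the mod-n bias small
    stream = b"".join(
        hashlib.sha256(token + i.to_bytes(4, "big")).digest()
        for i in range((k + 31) // 32)  # enough 32-byte blocks to cover k bytes
    )
    return int.from_bytes(stream[:k], "big") % n


def client_blind(token: bytes) -> tuple[int, int]:
    """Return (blinded message m', blinding factor r); r never leaves the client."""
    m = hash_to_int(token, N)
    while True:
        r = secrets.randbelow(N - 2) + 2
        if math.gcd(r, N) == 1:  # r must be invertible mod N to unblind later
            break
    return (m * pow(r, E, N)) % N, r


def server_sign_blinded(blinded: int) -> int:
    """Plain RSA signature over the blinded value: s' = m'^d mod n."""
    return pow(blinded, D, N)


def client_unblind(blind_sig: int, r: int) -> int:
    """s = s' * r^(-1) mod n, which equals m^d mod n."""
    return (blind_sig * pow(r, -1, N)) % N


def verify(token: bytes, sig: int) -> bool:
    """Standard RSA verification: s^e mod n must equal the hashed token."""
    return pow(sig, E, N) == hash_to_int(token, N)


# One small batch (5 here for speed): generate 256-bit tokens, blind, sign, unblind.
batch = [secrets.token_bytes(32) for _ in range(5)]
wallet = []
for t in batch:
    blinded, r = client_blind(t)              # only `blinded` is sent to the server
    s = client_unblind(server_sign_blinded(blinded), r)
    wallet.append((t, s))                     # stored locally for later redemption
```

Only `blinded` crosses the network at issuance, and only `(t, s)` is presented later at redemption; `r` stays in the client’s memory and can be discarded once unblinding is done.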

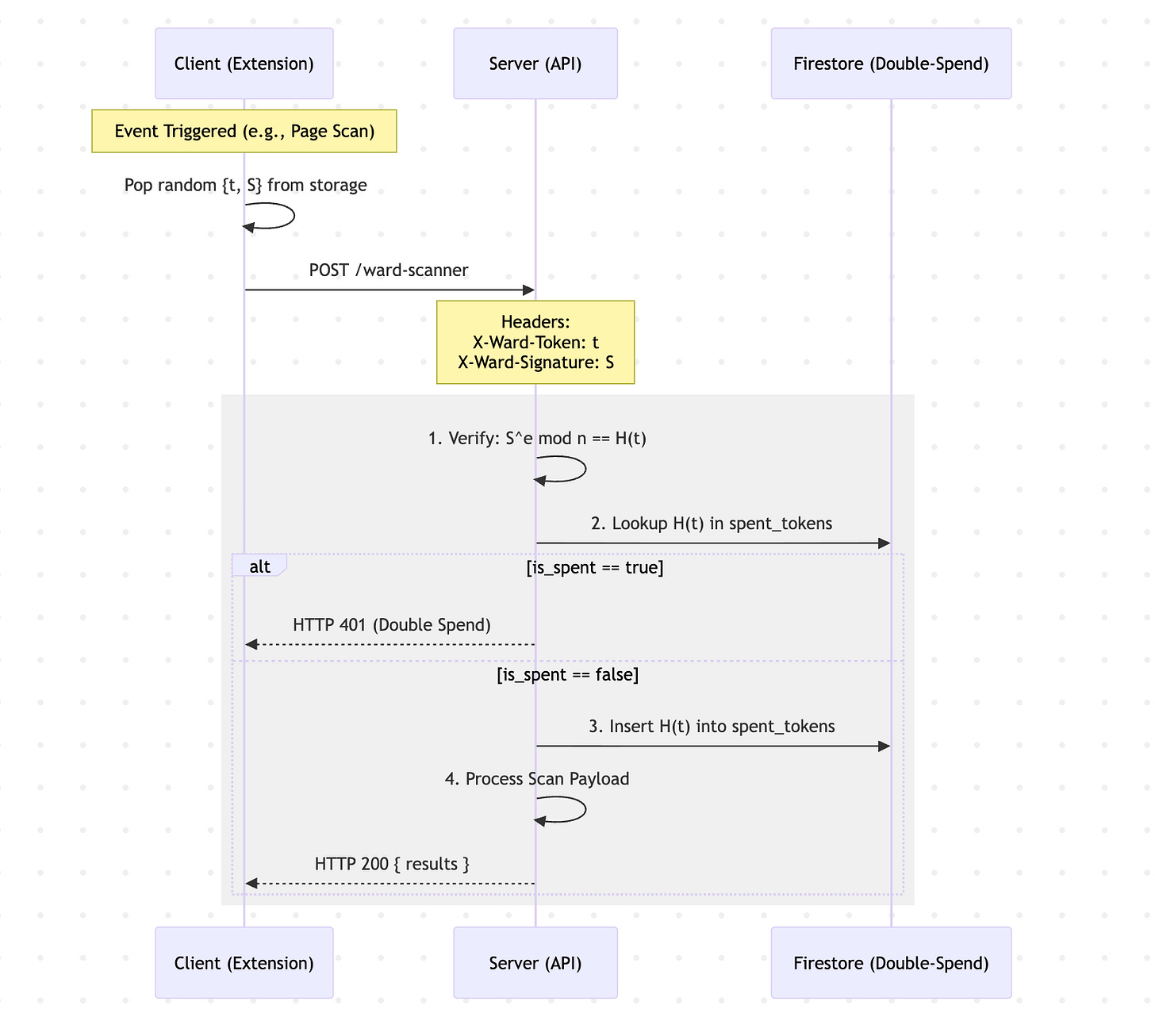

Redeeming tokens

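Client sends the URL, page content, and an anonymous token.

Server checks that the token has not already been spent; this check and the next step must happen atomically to avoid a race condition.

Server marks the token as spent.

Server processes the scan and returns the result to the client.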
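On the server, the redemption steps boil down to a signature check plus an atomic check-and-mark on the spent set. The sketch below is hypothetical: `SpentTokenStore` and `redeem` are illustrative names, a process-local lock stands in for whatever atomicity the real datastore provides, and signature verification is passed in as a callable (for example, the `pow(sig, e, n) == hash(token)` check from blind RSA).

```python
import hashlib
import threading
from typing import Callable


class SpentTokenStore:
    """In-memory stand-in for the server's spent-token records (hypothetical).

    Keyed by the SHA-256 of each token, so raw tokens never need to be stored.
    """

    def __init__(self) -> None:
        self._spent: set[str] = set()
        self._lock = threading.Lock()

    def try_spend(self, token: bytes) -> bool:
        """Check-and-mark in one critical section; True only for the first spend."""
        key = hashlib.sha256(token).hexdigest()
        with self._lock:
            if key in self._spent:
                return False
            self._spent.add(key)
            return True


def redeem(store: SpentTokenStore, token: bytes, sig: int,
           verify: Callable[[bytes, int], bool]) -> str:
    # Reject forged tokens before touching the spent set.
    if not verify(token, sig):
        return "invalid"
    if not store.try_spend(token):
        return "already spent"
    return "ok"  # the scan itself would run here
```

The check and the mark happen inside one critical section; doing them as two separate datastore calls is exactly the race where two concurrent requests both see the token as unspent. Because the request carries only the token and signature, nothing in this path refers to an installId.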

Libraries

Importantly, we chose to not roll our own crypto implementation, which can introduce flaws if overlooked (we’re not math experts, after all!).

Chrome (JS) → We used the Web Crypto API: crypto.subtle.digest, built into the browser, provides SHA-256 hashing out of the box.

Backend API (Python) → We used the third-party cryptography package (not part of the standard library) for RSA signing.
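For reference, these are the Python standard-library counterparts to the two browser primitives mentioned in this article. `hashlib.sha256` produces the same 32 bytes as `crypto.subtle.digest("SHA-256", data)`, and `secrets.token_bytes` plays the role of `crypto.getRandomValues`. The helper names are hypothetical.

```python
import hashlib
import secrets


def new_token() -> bytes:
    """256-bit random token; Python's counterpart to crypto.getRandomValues."""
    return secrets.token_bytes(32)


def token_digest(token: bytes) -> str:
    """Hex SHA-256, the same bytes crypto.subtle.digest("SHA-256", token) returns."""
    return hashlib.sha256(token).hexdigest()
```

`token_digest(b"abc")` returns the standard SHA-256 test vector `ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad`, which makes a handy cross-check against the browser side.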

I’m lost. Where is the mathematical unlinkability?

The random value r is the "blinding factor": it hides m from the server and then cancels out during unblinding. It’s:

Generated randomly on the client: crypto.getRandomValues(rBytes)

Never sent to the server

Used to unblind locally: s = s' × r^(-1) mod n

Without r, the server cannot link blinded_message (seen at issuance) to token (seen at redemption).
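Here is why r cancels out, assuming the textbook blind RSA construction: (n, e) is the server’s public key, d is its private exponent, and the client blinds by computing m' = m · r^e mod n. That blinding formula is the standard one rather than something this article spells out.

```latex
\begin{aligned}
m' &\equiv m \cdot r^{e} \pmod{n} && \text{client blinds} \\
s' &\equiv (m')^{d} \equiv m^{d} \cdot r^{ed} \equiv m^{d} \cdot r \pmod{n} && \text{server signs, since } r^{ed} \equiv r \\
s  &\equiv s' \cdot r^{-1} \equiv m^{d} \cdot r \cdot r^{-1} \equiv m^{d} \pmod{n} && \text{client unblinds} \\
s^{e} &\equiv m^{de} \equiv m \pmod{n} && \text{anyone can verify}
\end{aligned}
```

The server only ever sees m', and because r is uniformly random, m' is itself a uniformly random value that reveals nothing about m.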

What if the server stored blinded tokens?

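Even if the server stored every blinded token at issuance, it still could not deanonymize users: because r is uniformly random, each stored blinded value is equally consistent with every token that is later redeemed.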
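You can check this claim numerically. In the toy sketch below (insecure Mersenne-prime key, messages treated as random integers with hashing skipped, hypothetical helper names), the server logs every blinded value it signs and then, for every pairing of a logged value with a redeemed message, solves for a blinding factor that would explain it: r = (m' · m^(-1))^d mod n. Every pairing works, including the wrong ones, so the log gives the server no way to tell which redemption came from which issuance.

```python
import math
import secrets

# TOY KEY, INSECURE (public Mersenne primes); for illustration only.
P, Q = 2**521 - 1, 2**607 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))


def random_unit() -> int:
    """Random integer in [2, N) that is invertible mod N."""
    while True:
        x = secrets.randbelow(N - 2) + 2
        if math.gcd(x, N) == 1:
            return x


# Two clients each blind a message; the server logs every blinded value it signs.
messages = [random_unit(), random_unit()]
factors = [random_unit(), random_unit()]
server_log = [(m * pow(r, E, N)) % N for m, r in zip(messages, factors)]


def explaining_factor(blinded: int, m: int) -> int:
    """A blinding factor r with m * r^e == blinded (mod N), found with the private key."""
    return pow(blinded * pow(m, -1, N) % N, D, N)


# Try every (logged blinded value, redeemed message) pairing, right or wrong.
pairings_consistent = [
    blinded == m * pow(explaining_factor(blinded, m), E, N) % N
    for blinded in server_log
    for m in messages
]
```

All four entries of `pairings_consistent` come out `True`. Knowing the private key does not help: for the true pairing the recovered factor is the client’s real r, but the wrong pairings yield equally valid factors, and since r was chosen uniformly at random none of them is more likely than another.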

What about side-channel attacks?

We’ve accounted for common side-channel attacks with:

jittered token refreshes

protection against batch-size fingerprinting

random token usage order
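A client-side sketch of these mitigations might look like the following. It is a hypothetical illustration, not Ward’s implementation: the refresh interval, the ±25% jitter, and the fixed batch size of 100 are made-up values, and treating a fixed batch size as the defense against batch-size fingerprinting is our reading of the list above.

```python
import random
import secrets

BATCH_SIZE = 100                  # hypothetical fixed batch size for every client
BASE_REFRESH_SECONDS = 6 * 3600   # hypothetical nominal refresh interval
JITTER_FRACTION = 0.25            # hypothetical: up to +/-25% random jitter

_rng = random.SystemRandom()  # OS-backed randomness, not the default seeded generator


def next_refresh_delay() -> float:
    """Seconds until the next batch refresh: nominal interval plus uniform jitter."""
    spread = BASE_REFRESH_SECONDS * JITTER_FRACTION
    return BASE_REFRESH_SECONDS + _rng.uniform(-spread, spread)


class TokenWallet:
    """Hypothetical client-side store of (token, signature) pairs."""

    def __init__(self) -> None:
        self._tokens: list[tuple[bytes, int]] = []

    def add_batch(self, batch: list[tuple[bytes, int]]) -> None:
        # Every batch has the same size, so its size says nothing about the client.
        if len(batch) != BATCH_SIZE:
            raise ValueError(f"expected a batch of {BATCH_SIZE}, got {len(batch)}")
        self._tokens.extend(batch)

    def take(self) -> tuple[bytes, int]:
        """Remove and return a uniformly random token instead of the oldest one."""
        if not self._tokens:
            raise LookupError("wallet is empty; a refresh is needed")
        return self._tokens.pop(secrets.randbelow(len(self._tokens)))

    def __len__(self) -> int:
        return len(self._tokens)
```

The randomness comes from the OS (`random.SystemRandom` and `secrets`) rather than Python’s default seeded generator, so neither the refresh schedule nor the spend order can be predicted from earlier outputs.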

We chose RSA over ECC (elliptic-curve cryptography) for simplicity: blind RSA is just easier to implement with standard libraries.

Takeaways

Most services on the web are not built to be privacy-first: they use the common one-API-key-per-user model, or protocols like OAuth2, which are not blind.

This practice enables data collection that often goes beyond the scope of the service: for advertising, marketing, tracking, and more.

If you’re architecting a new service, think hard about the privacy guarantees you make to users and whether you can adopt a blind signatures model.

There aren’t enough open-source user-privacy resources out there, especially for Python. If anyone reading this wants to collab, drop me a line!

Resources & Inspiration

https://github.com/cloudflare/blindrsa-ts

This was the inspiration for this model in Ward. Cloudflare has really led the pack on user privacy research recently, and was an excellent reference.

Caution: it’s TypeScript-only.

When will you implement this?

This architecture is standard in Ward as of version 1.2.0, which we released this past week! You can try it out yourself in our extension.

How we’re improving Ward’s privacy going forward

This redesign represents a massive shift forward in Ward’s privacy posture, but there’s still more work to do.

We’re exploring Oblivious HTTP (OHTTP) relays from providers such as Cloudflare or Fastly, which route requests through a managed proxy service so that our backend is also blind to the user’s network identity, such as their IP address.

We’re tightening our privacy policy to be more transparent and consistent with the data we collect.

We have an exciting announcement coming soon regarding a solution that scans other Chrome extensions for detailed threats, natively integrated into the Ward platform.

Questions?

Are you considering a similar approach? Drop me a comment here or feel free to reach out directly: cedric@tryward.app!